Table of contents

- History of the Sitemap.xml Protocol

- Sitemap – What Exactly Is It?

- Contents of the Sitemap.xml File

- Sitemap – XML or HTML

- The Benefits of Making a Sitemap for Web Crawlers

- How to Create a Sitemap

- How to Create a WordPress Sitemap

- Sitemap Do’s and Don’ts

- I have sitemap.xml, what next?

- Sitemap.xml – what else is worth remembering about?

A good sense of direction in the wild is a desirable trait not only for travelers, but also for a special kind of computer program – web crawlers. Unfortunately, most of the bots that are crucial for effective site indexing have not been endowed with a sense of navigation and need our help. Enter the sitemap, riding in on a silver stallion. 🙂

Optimizing a website consists of many steps. However, even perfect preparation is of little use if web crawlers don’t reach all the pages you want indexed… And this is where sitemaps come to the rescue. This inconspicuous XML file is an indispensable guide that lets web robots quickly discover every important page of a site, which speeds up indexing and appearance in search results.

History of the Sitemap.xml Protocol

The first mention of sitemaps appeared on the Google blog in June 2005.

The main task of sitemaps was to provide Google with information about changes on a website and to increase the number of indexed subpages. From a 15-year perspective, we can safely say the project succeeded – the sitemap still fulfils its original role.

Google did not limit itself to increasing its crawling potential – the sitemap.xml project was granted a Creative Commons licence so that other search engines could use it as well. A year later Yahoo! and Microsoft declared their support for sitemap.xml, and in the following years Ask.com and IBM joined them.

Sitemap – What Exactly Is It?

A sitemap is a list of URLs we want to point out to web crawlers. XML (Extensible Markup Language) is a universal markup language used to describe data in a structured way that can be read on any platform, regardless of the technology a given system uses.

The sitemap should include all addresses that are valid and public – home pages, information pages, category pages, product pages, etc. In the map file you can also (and even should!) include addresses of subpages that are not linked from the menu. XML lets you describe them precisely and mark important data, such as the date a subpage was last modified.

This method of presenting information about what is happening on your website allows you to quickly inform web bots about new subpages or changes to existing ones. Giving crawlers a list of important addresses in the domain significantly speeds up indexing – the program does not have to look for all the elements on its own; it just uses the ready-made list.

Contents of the Sitemap.xml File

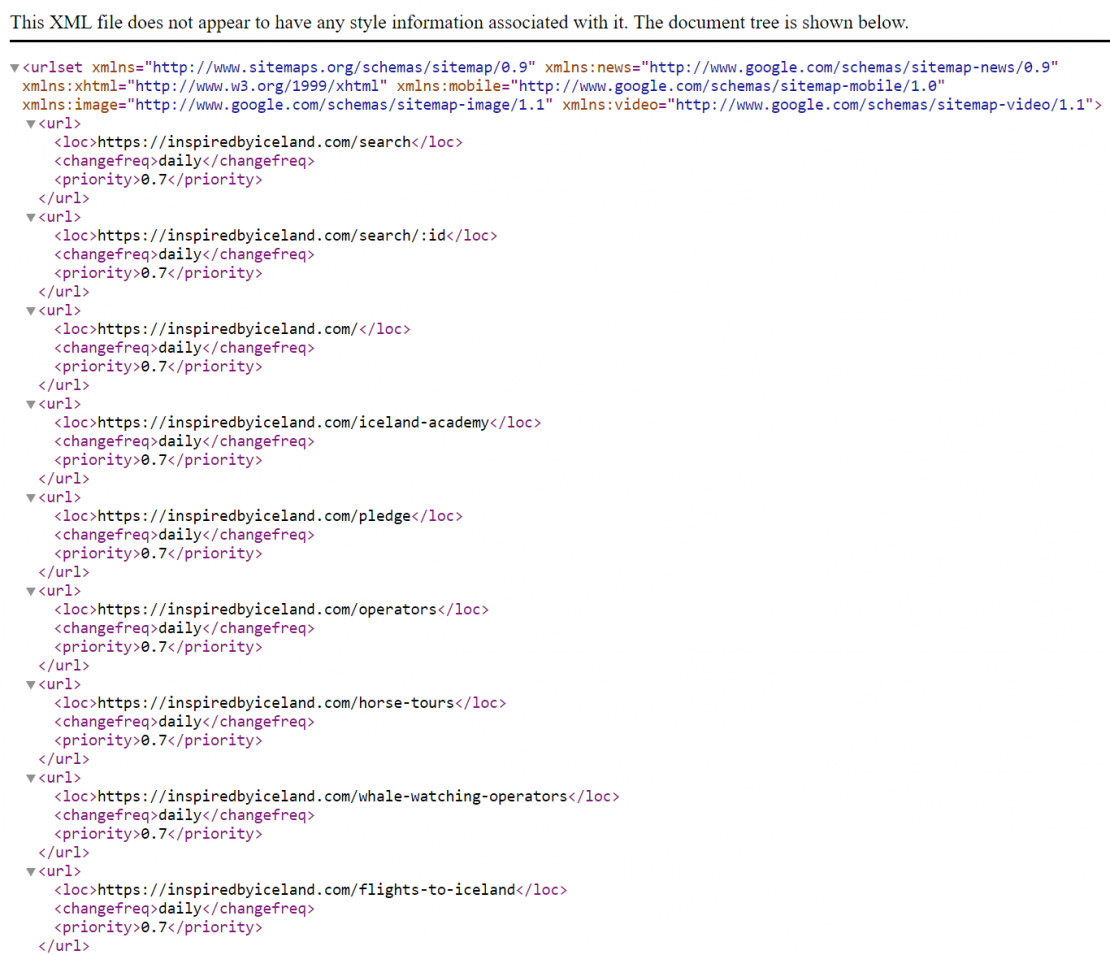

Sitemap.xml – as you can see at the top of the screen – doesn’t have display guidelines (unlike pages created in HTML and CSS), hence its form is a bit unfriendly to the ordinary user.

Above you can see two versions of the same file – the sitemap of the klodzko.pl website. The first graphic presents the view in the browser, the second one is a preview of the file code. Let’s decode them:

- <?xml version="1.0" encoding="UTF-8"?> – the first attribute gives the XML version used to create the file, the second the character encoding standard

- <urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9"> – this line opens the tag in which all links contained in the sitemap will be placed.

- <loc> – subpage address

- <lastmod> – additional information: the date of the last modification of the document located at the given address.

Other information can also appear here:

- <priority> – the value of this tag should be in the range 0.0 to 1.0, and its task is to tell bots which subpages matter most relative to the other subpages of the same site.
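To make the structure described above more concrete, here is a minimal sketch that assembles such a file with Python's standard library. The example.com addresses, the dates and the helper name build_sitemap are made up for illustration; the sketch also wraps each entry in a <url> element, as the sitemap protocol requires.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages):
    """Return sitemap XML (as a string) for a list of page dicts.

    Each dict needs a "loc" key; "lastmod" and "priority" are optional.
    """
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for page in pages:
        url = ET.SubElement(urlset, "url")  # one <url> entry per address
        ET.SubElement(url, "loc").text = page["loc"]
        if "lastmod" in page:
            ET.SubElement(url, "lastmod").text = page["lastmod"]
        if "priority" in page:
            ET.SubElement(url, "priority").text = f"{page['priority']:.1f}"
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


# Hypothetical pages, used only to show the output format.
example_pages = [
    {"loc": "https://www.example.com/", "lastmod": "2024-01-15", "priority": 1.0},
    {"loc": "https://www.example.com/contact", "priority": 0.5},
]
sitemap_xml = build_sitemap(example_pages)
print(sitemap_xml)
```

Only <loc> is mandatory for each entry, which is why the sketch treats the other two tags as optional.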

Sitemap – XML or HTML

Sitemap.xml is not the only “sitemap” that can appear within a website. There is also the HTML map, whose task is to show the user the way – e.g. by grouping links to the most important products. This solution is most often used by large websites.

These types of maps are created using HTML and CSS. They do not contain additional information, and the links are placed in tags that create lists, such as <ul> and <li>.
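As a rough illustration of such a list, the sketch below renders a handful of links as an unordered HTML list. The function name build_html_sitemap and the sample categories are assumptions made for this example, not part of any particular website.

```python
from html import escape


def build_html_sitemap(links):
    """Render (title, url) pairs as an unordered HTML list of links."""
    items = [
        f'  <li><a href="{escape(url, quote=True)}">{escape(title)}</a></li>'
        for title, url in links
    ]
    return "<ul>\n" + "\n".join(items) + "\n</ul>"


# Hypothetical categories of an online shop.
html_map = build_html_sitemap([
    ("Shoes & Boots", "/shoes"),
    ("Jackets", "/jackets"),
])
print(html_map)
```

Escaping the titles and addresses keeps characters such as & from breaking the markup.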

Are HTML maps useful? Certainly, they help lost site users get directions. They’re an additional place where links to various categories, tagged with keywords important to us, are located… which can be a nice support for internal linking. Creating a map of this type is worth considering for larger sites, but there’s no such need for smaller ones.

The Benefits of Making a Sitemap for Web Crawlers

The main task of a sitemap file is to show web bots the most important elements on a website. Creating an appropriate file is necessary in several cases:

- The website has many subpages – multi-page websites may have problems with fast indexing – before a robot finds all subpages and indexes them, it may take several weeks. A sitemap indicating key points will shorten this time and place the domain in search results faster.

- The site is new – a fresh domain is usually not very popular among Internet users and doesn’t get many links, which may make it difficult for web crawlers to “discover” it. Adding the site to the Google Search Console and then creating an XML file with a list of all the pages you want to appear in the search engine is the best way to place the site in the search results.

- The website has many “hidden” subpages – some websites do not have an extensive menu and, for various reasons, hide content outside the main categories. For example, categories with archived posts. If these places also hide valuable content, it is worth inviting web bots and allowing them to index this part of the website.

- The website vanished from search results – sometimes a website is de-indexed and does not appear in Google. These types of mistakes happen even to the biggest players – sometimes all it takes is accidentally changing the robots tag to “noindex” to erase the site from the SERPs. In such situations, the speed of reappearance in search results will be important, and a site map (especially if it was not there before) will allow a quick return to previous positions.

For small sites, a sitemap is not necessary – Google will cope well with crawling 5-10 subpages. Larger sites, especially those updated frequently, should prepare the file properly and make it available to bots.

How to Create a Sitemap

Preparing the sitemap.xml file is relatively simple, but doing it manually can be laborious. Fortunately, most CMSs generate sitemaps on their own or allow you to install a dedicated plug-in.

Note – the most popular URL where sitemaps are placed is www.your-website.com/sitemap.xml, though it is not mandatory. Some automatic audits will treat the lack of a sitemap at this address as an error and report a missing XML file. However, you should not worry about it. The address where the sitemap is placed can be specified in the robots.txt file.
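For illustration, a robots.txt file points to a sitemap with a Sitemap: line containing the full URL. The sketch below uses a made-up file with a hypothetical /maps/sitemap_index.xml location and reads it back with Python's standard robots.txt parser (the site_maps() method needs Python 3.8 or newer).

```python
from urllib.robotparser import RobotFileParser

# A made-up robots.txt; the sitemap location is an assumption for this example.
robots_txt = """\
User-agent: *
Disallow: /admin/

Sitemap: https://www.your-website.com/maps/sitemap_index.xml
"""

parser = RobotFileParser()
parser.parse(robots_txt.splitlines())

# site_maps() returns None when no Sitemap: line is present.
sitemap_urls = parser.site_maps() or []
print(sitemap_urls)
```

The Sitemap: line is independent of the User-agent groups, so it can sit anywhere in the file.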

How to prepare a sitemap on a website without CMS? The easiest way is to use one of the generators available online. Before placing it on the site, take the time to review the generated file – the generator, as well as web crawlers, may have problems with finding pages which are not linked in the menu or other places on the site. If they are not in the file, add them manually.

The size of sitemap.xml matters – the maximum size of a single file is 50 MB (uncompressed). You also shouldn’t exceed the number of addresses per map – here the limit is 50,000 URLs. When the domain consists of many thousands of subpages, it is worth splitting the file into several smaller ones and creating a sitemap index. Such solutions are used in WP plug-ins (see below) and store engines, which automatically generate whole sets of sitemaps. Creating an index with smaller maps will allow you to stay within the limits and keep a clear file structure.
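The splitting described above can be sketched as follows. This is only an outline under stated assumptions: the product URLs, the sitemap-N.xml file names and both helper names are invented for the example, and the 50 MB size check is left out.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"
MAX_URLS_PER_SITEMAP = 50_000  # the per-file limit quoted above


def chunk_urls(urls, size=MAX_URLS_PER_SITEMAP):
    """Split a list of URLs into consecutive chunks of at most `size` items."""
    return [urls[i:i + size] for i in range(0, len(urls), size)]


def build_sitemap_index(sitemap_locations):
    """Return a sitemap index (XML string) pointing at the child sitemaps."""
    index = ET.Element("sitemapindex", xmlns=SITEMAP_NS)
    for location in sitemap_locations:
        entry = ET.SubElement(index, "sitemap")
        ET.SubElement(entry, "loc").text = location
    body = ET.tostring(index, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


# 120,000 made-up product URLs need three files: 50,000 + 50,000 + 20,000.
all_urls = [f"https://www.example.com/product/{n}" for n in range(120_000)]
chunks = chunk_urls(all_urls)
child_locations = [
    f"https://www.example.com/sitemap-{number}.xml"
    for number in range(1, len(chunks) + 1)
]
index_xml = build_sitemap_index(child_locations)
print([len(chunk) for chunk in chunks])
```

Each chunk would then be written out as its own sitemap file, and only the index address needs to be handed to search engines.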

How to Create a WordPress Sitemap

As with most things in WordPress, there’s a plugin for it! Or actually… a whole range of plugins offered by the most popular SEO plugin providers – Yoast and All in One SEO.

Both plugins generate several sitemaps, and the index files listing them are available at the following addresses:

- Yoast: /sitemap_index.xml

- All in One SEO: /sitemap.xml

You can add and remove map components in the administrator’s panel in sections concerning maps and indexation.

Sitemap Do’s and Don’ts

Creating a sitemap and making it available to indexing robots is supposed to highlight the strongest parts of the website and encourage their placement in search results. With this in mind, it’s worth keeping your sitemap up-to-date and including only addresses that lead directly to the pages you want in the index. You should avoid:

- addresses that return a 404 error,

- addresses that redirect (status 301 or 302),

- non-canonical addresses (those that differ from the address given in the canonical tag).

Adding such addresses may cause problems with proper evaluation by Google. No wonder – being sent on a detour on the way from point A to point B frustrates us too, and lowers our trust in whatever sent us there.

It is also not worth placing in the sitemap file addresses blocked for web crawlers (with the <meta name="robots" content="noindex" /> tag or blocked in the robots.txt file).
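The rules above can be expressed as a simple filter. The sketch below assumes you already have crawl results for each address (status code, canonical address, noindex flag, robots.txt block); the CrawledPage structure and the sample data are hypothetical and stand in for whatever your crawler or audit tool exports.

```python
from dataclasses import dataclass


@dataclass
class CrawledPage:
    url: str
    status: int                      # HTTP status code returned for the URL
    canonical: str                   # address found in the page's canonical tag
    noindex: bool = False            # page carries a robots "noindex" meta tag
    blocked_by_robots: bool = False  # URL is disallowed in robots.txt


def belongs_in_sitemap(page):
    """Keep only 200 OK, self-canonical, indexable, unblocked addresses."""
    return (
        page.status == 200
        and page.canonical == page.url
        and not page.noindex
        and not page.blocked_by_robots
    )


# Made-up crawl results covering each case from the list above.
pages = [
    CrawledPage("https://www.example.com/", 200, "https://www.example.com/"),
    CrawledPage("https://www.example.com/old-offer", 404, ""),
    CrawledPage("https://www.example.com/moved", 301, "https://www.example.com/new"),
    CrawledPage("https://www.example.com/shoes?sort=price", 200,
                "https://www.example.com/shoes"),
    CrawledPage("https://www.example.com/cart", 200,
                "https://www.example.com/cart", noindex=True),
]
sitemap_candidates = [page.url for page in pages if belongs_in_sitemap(page)]
print(sitemap_candidates)
```

In this sample only the home page passes every check, so it is the only address that would go into the sitemap.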

I have sitemap.xml, what next?

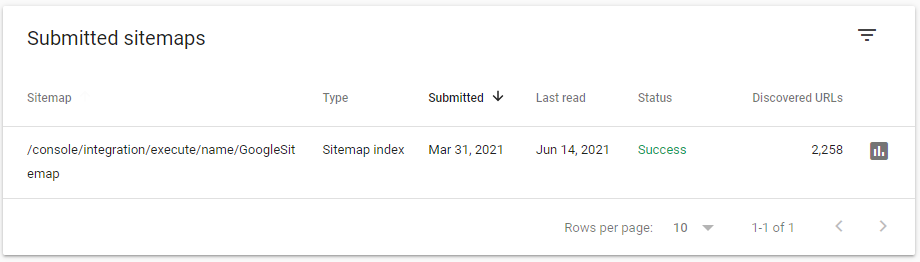

Creating a sitemap.xml file and uploading it to your server is not the end of providing clues for web bots. The map also has its own special place in Google Search Console.

In GSC, go to the “Sitemaps” tab located in the menu on the left. In the tab, add the map address – paste just the path to the file, without the domain address – and click “Submit”.

After a moment, the map address will be placed in the “Submitted sitemaps” table, where you’ll also find detailed information about the map – date of submission, date of the last read, map status and number of detected addresses.

Clicking on the map address will take you to the next screen.

There, click “See Index Coverage” for more details.

This is where you’ll find all the information about the indexing of specific subpages, as well as information about problems Googlebot encountered.

Sitemap.xml – what else is worth remembering about?

Creating a sitemap is not the end – you should update it from time to time, especially after making significant changes to the addresses or structure of the site:

- implementing an SSL certificate and switching to https changes every address on the site,

- redirecting or removing individual addresses – it is enough to update their URLs in the sitemap.xml file or remove them from it,

- a complete redesign of the website.
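As a small illustration of the first two cases, the sketch below drops removed addresses from a list of sitemap URLs and switches the rest from http to https. The helper names and the example.com addresses are assumptions made for this example; a real update would usually be handled by the CMS or plugin that generates the file.

```python
from urllib.parse import urlsplit, urlunsplit


def to_https(url):
    """Return the same URL with the scheme switched from http to https."""
    parts = urlsplit(url)
    if parts.scheme == "http":
        parts = parts._replace(scheme="https")
    return urlunsplit(parts)


def refresh_urls(urls, removed=()):
    """Drop removed addresses, then upgrade what is left to https."""
    removed_set = set(removed)
    return [to_https(url) for url in urls if url not in removed_set]


# Hypothetical sitemap entries from before the migration.
old_urls = [
    "http://www.example.com/",
    "http://www.example.com/blog?page=2",
    "http://www.example.com/discontinued-product",
]
new_urls = refresh_urls(
    old_urls, removed=["http://www.example.com/discontinued-product"]
)
print(new_urls)
```

The refreshed list can then be written back to sitemap.xml and resubmitted in Google Search Console.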

Updating sitemap.xml is also one of the actions worth taking after keyword optimization.

Sitemap.xml may not be as colourful as its classic cousins, but it’s definitely worth using!